ChatGPT, Customer Support, and the Future

December 06, 2022

In the last week, memes exploded as folks started sharing screenshots from a new AI chatbot called ChatGPT. You can ask it questions like you would a friend, and it’ll generate a response. It’ll write stories in deep detail about how AI will take over the world, or give you a recipe for dinner.

At first, I assumed this would be something like SmarterChild from the AIM days, or BlenderBot, the party trick that was cool for two seconds. AI sentiment analysis, AI tagging for categorization, and “Answer Bots” have also existed for a while. All to say, “AI” has been around in some form for some time, so I rolled my eyes at first.

After looking a little further, there is something new here. ChatGPT is a new breed of AI that falls under the umbrella of generative AI. These tools take vast amounts of existing data and use it to create something brand new from the prompts you give them. They’ve taken the art world by storm (controversially in many cases) because they’re now accessible enough to make stock images or hero pictures for a blog post: type a prompt like “An astronaut riding a horse” and seconds later that very picture is generated for you. OpenAI, the research company that created ChatGPT, is the leader in this space.

What makes ChatGPT impressive is that it responds conversationally in real time. Seriously, most of the time it responds quicker than a human could. You can even tell it to answer in the tone of someone well known. The dataset it’s built on includes knowledge of programming and “the internet” as a whole. Imagine if Google talked back during a search, or if StackOverflow just gave you the answer.

So, as someone whose job is often answering questions in a support queue, I set out to answer the question “Will I be replaced by an all-knowing chatbot next year?”

Some caveats before we begin:

- ChatGPT itself is more of a research preview. It’s built on AI tech that OpenAI has worked on over the past few years, so it’s an overnight success years in the making.

- Using it in a business setting would require a commercial version of it.

- Generative AI is built on a huge amount of training already done on its dataset. Everything it creates has existed in some form before, even though each output is unique to that moment. That means any bias, accuracies, or inaccuracies in the dataset are passed on to the output.

- At the time of publishing I haven’t taken high-res screenshots (old MacBook Air life); those will come later. Sorry if you’re reading right away!

The Intrigue

Postmark, the product I support, is aimed primarily at developers. We’re an Email API, and our entire service is well documented on our product site. In other words, we’re a perfect target for a programming-savvy generative AI that’s about to take over the world to crawl and index into its dataset.

Sometimes a support question is about our pricing and I have the answer memorized; other times it’s an in-depth question about our PHP library that requires reviewing both the customer’s code and our library to find a solution.

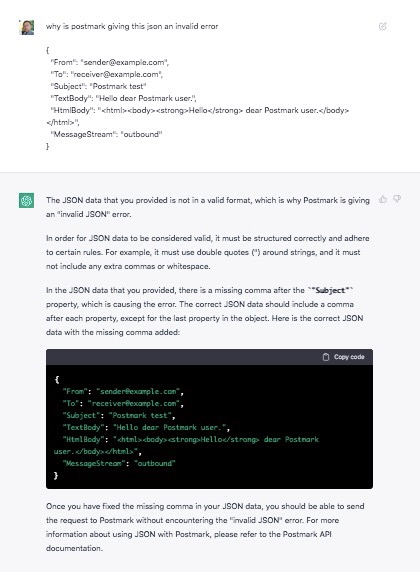

I asked ChatGPT technical questions, such as what was wrong with some JSON I used, and it spotted the problem and suggested the correct solution.
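For comparison, the standard library can already point at the location of a JSON syntax error, even if it can’t explain the fix the way ChatGPT did. Here is a minimal Python sketch using only the built-in `json` module; the `find_json_error` helper and the trailing-comma sample are hypothetical stand-ins, not the JSON from the screenshot.

```python
import json
from typing import Optional


def find_json_error(text: str) -> Optional[str]:
    """Return None if `text` parses as JSON, otherwise a short description of the first error."""
    try:
        json.loads(text)
    except json.JSONDecodeError as err:
        # lineno/colno are 1-based positions where the parser gave up
        return f"line {err.lineno}, column {err.colno}: {err.msg}"
    return None


# Hypothetical example: a trailing comma, a common hand-edited JSON mistake.
broken = '{"From": "sender@example.com", "To": "receiver@example.com",}'
fixed = '{"From": "sender@example.com", "To": "receiver@example.com"}'

print(find_json_error(broken))  # prints a line/column and the parser's message
print(find_json_error(fixed))   # prints None
```

The exact error wording varies between Python versions, which is part of why a human (or a bot) explaining *why* it’s wrong is still useful.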

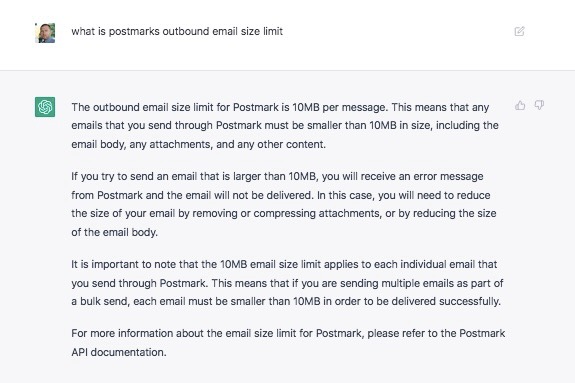

The same goes for asking about our size limits.

While I didn’t grab a screenshot of it, you could even say “sell me on Postmark’s Email API in the voice of Chris Rock” and it would generate a response as if it came from Chris Rock.

Some of these responses were uncanny in their accuracy and speed; there was effectively no latency. And heck, the ability to set the tone is amazing. It’s not hard to imagine feeding it my last 1,000 tickets and getting back a voice that sounds like mine.

Where it gets it wrong

While ChatGPT clearly has some knowledge, there are some issues as you dig deeper.

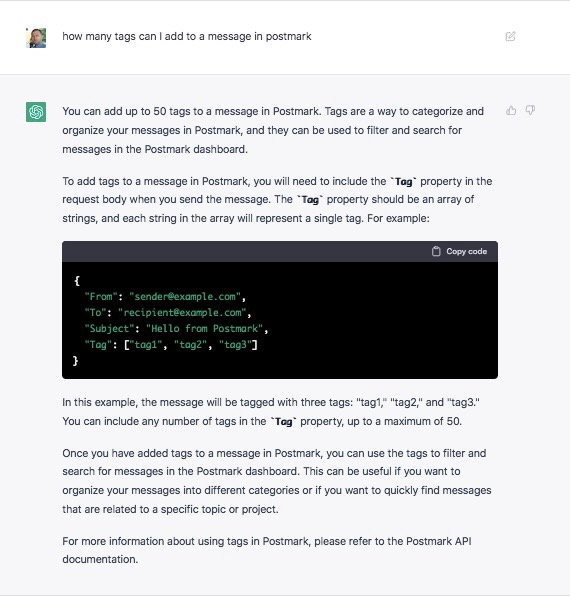

For instance, when I asked ChatGPT how many Tags I can add to a message sent through Postmark, it incorrectly answered 50, and when I asked for a code snippet showing how to do that, it provided syntax that wouldn’t work with our API.

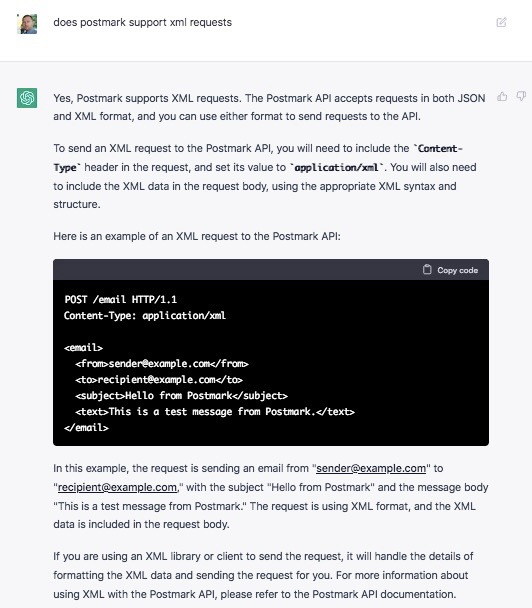

When I asked it how to make an XML request to our API (which we don’t support), it happily generated a code snippet that looks correct.
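For contrast with those answers, here is a minimal Python sketch of what building a request to send one email might look like. It assumes the endpoint (`https://api.postmarkapp.com/email`), the `X-Postmark-Server-Token` header, and the field names (`From`, `To`, `Subject`, `TextBody`, `Tag`) from Postmark’s public API reference, where `Tag` is a single string per message; check that reference before relying on any of it. It highlights the two things ChatGPT got wrong: the body is JSON rather than XML, and `Tag` is one string rather than a list. The helpers `build_payload` and `build_request` are hypothetical, and the request is only constructed, never sent.

```python
import json
import urllib.request
from typing import Optional

# Assumed endpoint for sending a single email (see Postmark's API reference).
POSTMARK_EMAIL_URL = "https://api.postmarkapp.com/email"


def build_payload(sender: str, to: str, subject: str, text_body: str,
                  tag: Optional[str] = None) -> dict:
    """Build the JSON body for one message. `Tag` is a single string, not a list."""
    payload = {
        "From": sender,
        "To": to,
        "Subject": subject,
        "TextBody": text_body,
    }
    if tag is not None:
        if not isinstance(tag, str):
            raise TypeError("Tag must be a single string, not a list of tags")
        payload["Tag"] = tag
    return payload


def build_request(server_token: str, payload: dict) -> urllib.request.Request:
    """Wrap the payload in a POST request; the body is JSON, not XML."""
    return urllib.request.Request(
        POSTMARK_EMAIL_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Accept": "application/json",
            "Content-Type": "application/json",
            "X-Postmark-Server-Token": server_token,  # hypothetical token below
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_request(
        "YOUR_SERVER_TOKEN",
        build_payload("sender@example.com", "receiver@example.com",
                      "Hello", "Hi there", tag="welcome"),
    )
    print(request.get_method(), request.full_url)
```

Passing the returned request to `urllib.request.urlopen` would actually send it; a real integration would also want error handling around that call, which this sketch skips.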

The same goes for our “Inbound Processing” feature, where the response was nonsensical.

While this is incredibly powerful, the inaccurate answers look and feel just like the accurate ones, so it’s hard to tell the two apart. Not great. I’ll also note that if this were used for Postmark, we could train it to avoid these mistakes, but humans can ask a very long tail of questions that would produce weird answers like the ones above.

A digression

In 2016 I went to a talk on automated vehicles and their future, given by someone from the National Advanced Driving Simulator. The talk included a demonstration of a Level 2 autonomous Volvo (lane keep assist, adaptive cruise control, etc.). Soon after that, the city I live in was designated by the U.S. Department of Transportation as one of 10 proving grounds for autonomous vehicles.

For a period of time, it felt like we were on the cusp of a high automation future for driving where few would actively drive.

Where are we now? The US disbanded the autonomous proving grounds idea and Volvo has yet to sell a Level 3 autonomous vehicle. The futuristic world of the car driving itself is a far-off dream (and believed impossible by some).

What is happening is that the technology I saw in 2016 has found its way into nearly every car, not just luxury Volvos. Toyota’s entry-level car, the $22k USD Corolla, comes with Level 2 autonomous features.

The bull case

While it feels like the support profession is on the cusp of being wiped out overnight, I think this technology’s adoption will look more like that of autonomous driving.

If this is successfully commercialized, a lot of questions will become low-hanging fruit for an AI system like this to answer. Help desk software, the Zendesks and Help Scouts of the world, will build conversational AI into their core feature sets. While that’s a little scary to type out, it’s a net gain: it’ll free folks up to work on more complex cases and hopefully make them more productive instead of answering the same question over and over.

There might be a “heads-up display” feature to use before escalating a ticket. I’m imagining something like the chat AI taking in past conversations and suggesting a path forward without having to pull in a developer. Think: instead of Grammarly suggesting syntax changes, a bot suggesting a way to rewrite the entire email reply.

Similarly, for complex questions it might suggest a solution that someone who can verify its accuracy then quickly edits. Kinda like pair programming, but for support.

There’ll be cottage industries built around “SEO-ing” your content so it gets pulled in. Also, imagine adversarial content created in the hope of making a competitor’s information inaccurate. Want to hurt your competitor? Fill up GitHub with open source libraries full of bad code examples for their service!

The bear case

Looking at the incorrect answers ChatGPT gave me, I could instantly recognize they were wrong. But somewhere in the datasets everything is generated from, those answers are there. There are also very real questions about the legality of how OpenAI obtains some of its datasets, along with GDPR concerns and whether chats are reviewed by OpenAI employees after the fact for AI grading.

Putting myself in the shoes of someone deciding whether to use something like this, it’d be pretty hard to sign off on it if it will happily provide very convincing incorrect answers. We may find that the last mile for some of these chatbots is the hardest, since there’s a long tail of inaccuracies that are hard to fix.

I’ve also found that people just really want to talk to someone, and I often fall into that camp. We have no chatbot at Postmark, yet sometimes the very first message in our live chat is “Human please”. Everyone has unique needs, context, and use cases, and wants that human connection with someone to resolve something. It’s easy to put a value on a piece of tech that makes things more efficient; it’s harder to put a price on how much a customer values talking to a person.

The replacement conclusion

In its current state, ChatGPT is pretty expensive to run; it turns out cutting-edge tech comes with a price. My gut tells me there’s something here in the longer term (2-3 years) as costs come down. We’re also in a period of economic downturn for the tech industry, with some of the most talented people in the world out of work right now. I wouldn’t be surprised if the next great company built on this tech comes out of this period.

Many people will also misunderstand how generative AI works and confuse it with sentience. I can see this really confusing folks who default to trust, and it could cause real chaos. Think of the stories of elderly people who receive scam messages claiming a family member is in jail somewhere far away. Now add a conversational AI that can send a text that looks like it’s from someone else. Woof.

As I was working on a conclusion, I thought: why not feed it my outline and see what it generates? It continues to impress. ChatGPT can make a post more concise, probably with fewer grammar mistakes:

ChatGPT is a cutting-edge natural language processing technology that is changing the way we think about customer support. In this post, we’ll explore the benefits and limitations of this technology, as well as its potential future applications.

First, let’s take a look at what ChatGPT is and how it works. Essentially, ChatGPT is a large language model trained by OpenAI that is able to understand and generate human-like text. This allows it to engage in conversation with users in a way that is natural and intuitive.

One of the most impressive aspects of ChatGPT is its ability to learn from previous conversations and improve its responses over time. This means that it can become more and more effective at handling customer queries and providing helpful answers.

However, ChatGPT is not perfect. Like any AI technology, it can sometimes produce responses that are inaccurate or inappropriate. For example, it may not understand the context of a conversation or may provide a response that is not relevant to the user’s query.

One potential limitation of ChatGPT is that it is not yet able to handle more complex customer support tasks. For example, it may struggle to handle requests for refunds or returns, or to provide detailed product information. This means that it is not yet ready to fully replace human customer support agents.

Another area where ChatGPT may not be as effective is in handling more emotional or personal interactions. For example, it may not be able to provide the same level of empathy and understanding as a human customer support agent.

Despite these limitations, ChatGPT has the potential to be a valuable tool for customer support teams. By handling routine queries and freeing up human agents to focus on more complex tasks, ChatGPT could help businesses improve their customer service and reduce their operating costs.

In some ways, ChatGPT is similar to autonomous cars. Just like autonomous cars, ChatGPT is a cutting-edge technology with the potential to revolutionize its industry. However, it is not yet ready to fully replace human workers, and there are still many challenges to overcome before it can reach its full potential.

Overall, ChatGPT is an exciting technology with a lot of potential. While it is not yet ready to fully replace human customer support agents, it has the potential to improve the customer support experience and make it more efficient and cost-effective. As it continues to evolve and improve, it will be interesting to see how it will change the way we think about customer support.